OpenTone: Accessibility-First Feedback Generator

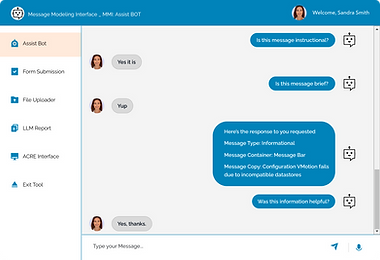

An independent continuation of the Message Modeling Interface (MMI) project.

Staff Product Designer, Design Systems • Interaction Design • Prompt-to-Component Prototyping • Self-Taught Vibe Coder


Summary

OpenTone is a tool I designed and built to make creating accessible feedback messages easier for designers and teams. Whether it’s a toast, banner, modal, or inline alert, OpenTone helps ensure that these common UI patterns are not only visually consistent but also accessible for all users.

The idea started with an earlier project called the Message Modeling Interface (MMI), originally conceived by Sherrie Byron Haber. MMI explored how AI could help generate the right wording for feedback messages while also considering accessibility. When that project ended, I carried the seed forward by focusing on something more foundational: making sure the containers themselves (toasts, banners, modals, and inline alerts) meet accessibility standards and provide a solid base for any future language support.


Process Overview

While building OpenTone, I worked primarily inside Magic Patterns, rapidly iterating on layouts and components. But I quickly realized that prompts alone weren’t always the clearest way to describe nuanced UI adjustments.

To save time and avoid back-and-forth misinterpretations, I used the Magic Patterns Figma plugin to import screens directly into Figma. From there, I mocked up small UI refinements, such as spacing, alignment, or control placement, and annotated them visually. I then uploaded the annotated mockups back into Magic Patterns along with a short description.

This hybrid workflow, combining prompts with visual mockups, let me communicate design intent more precisely, reduce ambiguity, and reach the right UI outcome faster.

What I Built: Feature Highlights

OpenTone is a functional, responsive web app that lets you type feedback messages and preview them in four UI formats: toast, banner, modal, and inline.

At the heart of OpenTone is the accessibility sidebar, a real-time guide that helps designers check contrast, spacing, and container usage as they work. It acts like a built-in reviewer, catching issues early so that accessible design decisions become part of the workflow instead of an afterthought.

To give designers flexibility, OpenTone supports two input modes, located in the left column:

➤ Chat Mode

Chat Mode takes a more conversational approach. Designers simply enter a header and body message, then apply it with a single click. It’s fast, lightweight, and feels natural, almost like jotting down an idea in real time.

This makes Chat Mode ideal for quick iterations or early explorations, when the goal is to test different wording or see how a message looks inside the chosen container without going through extra steps.

➤ Form Mode

Form Mode provides a more structured experience, guiding designers through a step-by-step flow. In the first step, they enter the message header and body, while also selecting details like the message intent, urgency level, and target audience.

The second step focuses on accessibility choices: designers can enable contrast modes, screen reader optimization, and other adjustments that make messages more inclusive.

Finally, in step three, OpenTone generates a clear summary view that pulls everything together. This structured path helps designers create consistent, well-considered feedback messages without missing important details.
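To make the three-step flow concrete, here is a minimal sketch of how Form Mode's state and summary could be modeled. The field names (`intent`, `urgency`, `audience`, `highContrast`, `screenReaderOptimized`), the helpers `canAdvance` and `buildSummary`, and the rule that step 1 requires a header, body, intent, and urgency are all hypothetical, chosen for illustration rather than taken from OpenTone's code.

```typescript
// Hypothetical data model for Form Mode; names are illustrative only.
type Intent = "success" | "info" | "warning" | "error";
type Urgency = "low" | "medium" | "high";

// Step 1: message content and context.
interface MessageDetails {
  header: string;
  body: string;
  intent: Intent;
  urgency: Urgency;
  audience: string;
}

// Step 2: accessibility choices (assumed to be simple toggles).
interface AccessibilityOptions {
  highContrast: boolean;
  screenReaderOptimized: boolean;
}

type FormStep = 1 | 2 | 3;

// Decide whether the designer may move past the current step.
function canAdvance(step: FormStep, details: Partial<MessageDetails>): boolean {
  if (step === 1) {
    // Whitespace-only header or body does not count as filled in.
    return Boolean(
      details.header?.trim() && details.body?.trim() && details.intent && details.urgency,
    );
  }
  // Step 2 toggles are optional; step 3 is the final summary, so nothing follows it.
  return step === 2;
}

// Step 3: collapse every choice into a readable summary.
function buildSummary(details: MessageDetails, a11y: AccessibilityOptions): string[] {
  const enabled = [
    a11y.highContrast ? "high contrast" : null,
    a11y.screenReaderOptimized ? "screen reader optimization" : null,
  ].filter((x): x is string => x !== null);

  return [
    `Header: ${details.header}`,
    `Body: ${details.body}`,
    `Intent: ${details.intent} (urgency: ${details.urgency})`,
    `Audience: ${details.audience}`,
    `Accessibility: ${enabled.length > 0 ? enabled.join(", ") : "defaults only"}`,
  ];
}
```

Keeping the summary as plain data means the same output could feed both the step-three view and an export.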

➤ Preview Workspace

The center column is where designers see their work come to life. OpenTone provides four core message types (Toast, Banner, Modal, and Inline), each with its own preview tab. Designers can quickly switch between tabs to test how the same content adapts across different containers.

At the top of each preview is a suggestion space that offers real-time recommendations. For example, if a designer places a critical error inside a Toast, OpenTone may suggest using a Banner or Modal instead, helping align message type with best practices.
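A suggestion like the critical-error example can be expressed as a small rule function. This is a minimal sketch under assumed inputs (a chosen container plus the message's intent and urgency); the function name `suggestContainer` is hypothetical, and only the first rule comes from the description above. The second rule is an invented illustration of how further rules might be added.

```typescript
// Hypothetical rule-based container suggestions.
type Container = "toast" | "banner" | "modal" | "inline";
type Intent = "success" | "info" | "warning" | "error";
type Urgency = "low" | "medium" | "high";

interface Suggestion {
  message: string;
  alternatives: Container[];
}

// Returns null when the chosen container already suits the message.
function suggestContainer(chosen: Container, intent: Intent, urgency: Urgency): Suggestion | null {
  const critical = intent === "error" && urgency === "high";

  // Rule from the text: toasts are lightweight and easy to miss,
  // so a critical error belongs in a more prominent container.
  if (critical && chosen === "toast") {
    return {
      message: "Critical errors may be missed in a toast; consider a banner or modal.",
      alternatives: ["banner", "modal"],
    };
  }

  // Illustrative extra rule (an assumption): a modal blocks the page,
  // which is heavy-handed for a routine, low-urgency success message.
  if (chosen === "modal" && intent === "success" && urgency === "low") {
    return {
      message: "A modal blocks the page for a low-urgency success; consider a toast or inline message.",
      alternatives: ["toast", "inline"],
    };
  }

  return null;
}
```

Returning `null` for "no suggestion" keeps the suggestion space empty unless a rule actually fires.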

Switching between tabs makes it easy to explore each container's context:

- Toast for lightweight, dismissible alerts
- Banner for higher-visibility notices across a page
- Modal for critical or blocking interactions
- Inline for embedded, in-context guidance
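Each of these containers also implies different semantics for assistive technology. The sketch below shows one reasonable mapping from container and intent to ARIA attributes; the mapping and the function name `ariaFor` are assumptions for illustration, not OpenTone's actual output. The attribute names and role values themselves are standard WAI-ARIA.

```typescript
// Hypothetical mapping from container to ARIA semantics.
type Container = "toast" | "banner" | "modal" | "inline";
type Intent = "success" | "info" | "warning" | "error";

// A small subset of ARIA attributes relevant to feedback messages.
interface AriaSemantics {
  role?: "status" | "alert" | "dialog" | "alertdialog";
  "aria-live"?: "polite" | "assertive";
  "aria-modal"?: "true";
  "aria-atomic"?: "true";
}

function ariaFor(container: Container, intent: Intent): AriaSemantics {
  const urgent = intent === "error" || intent === "warning";

  if (container === "toast") {
    // Status messages are announced politely, without interrupting the user.
    return { role: "status", "aria-live": "polite", "aria-atomic": "true" };
  }
  if (container === "banner") {
    // Avoid role="banner": that is the page-header landmark, not a notice.
    // role="alert" already implies assertive; the explicit value is kept for clarity.
    return urgent
      ? { role: "alert", "aria-live": "assertive" }
      : { role: "status", "aria-live": "polite" };
  }
  if (container === "modal") {
    // Blocking dialogs also need an accessible name and focus management.
    return { role: urgent ? "alertdialog" : "dialog", "aria-modal": "true" };
  }
  // Inline guidance is usually tied to its field with aria-describedby
  // rather than announced through a live region.
  return {};
}
```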

Each preview also includes export options to copy or download code snippets, so designers can hand off polished, consistent components directly to developers. Combined with the accessibility sidebar, the preview workspace helps build usability and compliance into every design decision.
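An exported snippet has to keep user-entered copy from breaking the markup. Here is a minimal sketch of an exporter, assuming the output is plain HTML and that ARIA attributes are passed in from elsewhere; the function names `escapeHtml` and `toHtmlSnippet`, the `-title` id suffix, and the `h2` heading level are all hypothetical choices.

```typescript
// Escape text so user-entered copy cannot break out of the generated markup.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;") // must run first so later entities are not double-escaped
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Hypothetical exporter: message text plus ARIA attributes -> HTML snippet.
// Attribute names are assumed trusted (e.g. from a fixed ARIA mapping);
// only attribute values and message text are escaped.
function toHtmlSnippet(
  id: string,
  header: string,
  body: string,
  attrs: Record<string, string>,
): string {
  const headingId = `${id}-title`;
  const attrText = Object.keys(attrs)
    .map((name) => ` ${name}="${escapeHtml(attrs[name])}"`)
    .join("");

  return [
    // aria-labelledby gives the container an accessible name from its heading.
    `<div id="${escapeHtml(id)}"${attrText} aria-labelledby="${escapeHtml(headingId)}">`,
    `  <h2 id="${escapeHtml(headingId)}">${escapeHtml(header)}</h2>`,
    `  <p>${escapeHtml(body)}</p>`,
    `</div>`,
  ].join("\n");
}
```

For example, `toHtmlSnippet("msg1", "Save failed", "Try again <now>", { role: "alert" })` produces a `div` with `role="alert"` whose body text is rendered as `Try again &lt;now&gt;`.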

➤ Accessibility Checker Panel

The right column acts as OpenTone’s accessibility coach. As designers build their messages, the panel runs automatic checks against WCAG 2.2 and ARIA standards. It flags issues like insufficient color contrast, missing screen reader labels, or spacing concerns.
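Two of these checks, missing labels and spacing, can be sketched as simple rules over the controls inside a message (for example, a dismiss button). This assumes a hypothetical, simplified `ControlSpec` shape rather than a real DOM. The 24 by 24 CSS pixel minimum comes from WCAG 2.2 Success Criterion 2.5.8 (Target Size, Minimum); the sketch ignores that criterion's spacing and other exceptions, and approximates the accessible name as the `aria-label` or visible text.

```typescript
// Hypothetical, simplified description of a control inside a message container.
interface ControlSpec {
  kind: "button" | "link";
  visibleText: string; // empty for icon-only controls
  ariaLabel?: string;
  widthPx: number; // rendered size in CSS pixels
  heightPx: number;
}

interface Issue {
  criterion: string;
  detail: string;
}

// WCAG 2.2 SC 2.5.8 Target Size (Minimum); exceptions are not modeled here.
const MIN_TARGET_PX = 24;

function checkControl(control: ControlSpec): Issue[] {
  const issues: Issue[] = [];

  // Rough accessible-name approximation: a non-empty aria-label wins, else visible text.
  const name = (control.ariaLabel || control.visibleText).trim();
  if (name === "") {
    issues.push({
      criterion: "4.1.2 Name, Role, Value",
      detail: `${control.kind} has no accessible name; add visible text or aria-label.`,
    });
  }

  if (control.widthPx < MIN_TARGET_PX || control.heightPx < MIN_TARGET_PX) {
    issues.push({
      criterion: "2.5.8 Target Size (Minimum)",
      detail: `${control.widthPx}x${control.heightPx}px is below ${MIN_TARGET_PX}x${MIN_TARGET_PX}px.`,
    });
  }

  return issues;
}
```

A 16px icon-only close button would fail both checks; giving it `aria-label="Dismiss"` and a 24px hit area clears them.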

What makes this section powerful is that it goes beyond spotting problems: designers can often apply instant fixes with a single click. For example, if contrast is too low, the panel suggests and applies a compliant color adjustment.
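The contrast check and its one-click fix can be sketched with the WCAG relative-luminance formula and the 4.5:1 ratio that SC 1.4.3 requires for normal-size text. The luminance and ratio math follow WCAG; the repair strategy (nudging the text color toward black or white in small steps) and the names `fixContrast` and `RGB` are assumptions, one simple way such a fix could work, not necessarily OpenTone's approach.

```typescript
type RGB = [number, number, number]; // each channel 0 to 255

// WCAG relative luminance of an sRGB color. WCAG 2.2 uses 0.04045 as the
// threshold (older texts say 0.03928); no 8-bit value falls between the two.
function luminance([r, g, b]: RGB): number {
  const lin = (c: number): number => {
    const s = c / 255;
    return s <= 0.04045 ? s / 12.92 : Math.pow((s + 0.055) / 1.055, 2.4);
  };
  return 0.2126 * lin(r) + 0.7152 * lin(g) + 0.0722 * lin(b);
}

// Contrast ratio (L1 + 0.05) / (L2 + 0.05), with L1 the lighter color.
function contrastRatio(a: RGB, b: RGB): number {
  const la = luminance(a);
  const lb = luminance(b);
  return (Math.max(la, lb) + 0.05) / (Math.min(la, lb) + 0.05);
}

// Hypothetical auto-fix: blend the text color toward black or white
// (whichever contrasts more with the background) until it meets `target`.
function fixContrast(fg: RGB, bg: RGB, target = 4.5): RGB {
  const black: RGB = [0, 0, 0];
  const white: RGB = [255, 255, 255];
  const toward = contrastRatio(black, bg) >= contrastRatio(white, bg) ? black : white;

  for (let step = 0; step <= 20; step++) {
    const t = step / 20; // 0 keeps the original color, 1 is pure black/white
    const candidate = fg.map((c, i) => Math.round(c + (toward[i] - c) * t)) as RGB;
    if (contrastRatio(candidate, bg) >= target) return candidate;
  }
  // Target unreachable on this background; return the best available color.
  return toward;
}
```

For instance, gray `#777777` on white measures just under 4.5:1, so `fixContrast` darkens it slightly; a color that already passes is returned unchanged, keeping the fix as small as possible.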

This real-time feedback helps designers catch accessibility issues early, build confidence in their choices, and ensure every message works for as many users as possible.

➤ Dark/Light Mode toggle for flexibility in different design environments.

➤ Export options that let users copy or download their messages directly.

➤ Live deployed version that turns what would normally be a design prototype into a real, usable tool.

What I Learned

This project stretched me in new ways compared to my first VibeCoding experience with Orbit. With Orbit, I worked in V0.dev and then built everything locally in VS Code using Tailwind and Vite, a process that was rewarding but slow. OpenTone was different: by working in Magic Patterns, I could generate concepts quickly, test functionality, and roll back to previous versions if a direction wasn’t working. That ability to move back and forth between iterations saved a lot of time and reduced friction.

I also discovered the value of using the Magic Patterns Figma plugin. Exporting screens into Figma allowed me to mock up small adjustments visually and re-import them, which was often faster and clearer than trying to describe every detail in a text prompt. This hybrid workflow made communication with Magic Patterns much smoother.

Another lesson was about prompting itself. With GPTs, I often rely on natural, sometimes rambling prompts that the model can untangle. Magic Patterns, however, demanded concise, simple instructions. I learned that keeping prompts short, direct, and in plain language consistently gave me better results than over-explaining. It’s a small but important shift in mindset that I’ll carry forward.

Finally, I gained an appreciation for using a design-first tool like Magic Patterns before diving into raw code. Many features I explored here, from accessibility checks to multi-mode inputs, would have taken far longer to prototype in VS Code. This showed me how valuable it is to pick the right tool for the right phase of the process.

Overall, OpenTone reinforced that learning new platforms, adapting my communication style, and blending visual and text-based workflows all make me a stronger designer stepping into this new space of VibeCoding.

What’s Next

OpenTone is just the beginning. My next step is to continue expanding its capabilities and exploring how far I can take this idea of an accessibility-first feedback generator.

One area I’m especially excited to pursue is bringing back part of the original MMI project vision: enabling the tool not just to suggest the right container and validate accessibility, but also to help craft the language itself. The goal would be to integrate a conversational assistant that acts like a content strategist, generating message copy that is clear, consistent, and accessible by default.

This evolution would make OpenTone a more complete system: guiding both how feedback is displayed and what feedback says. It’s the natural next step toward building a robust, accessibility-focused messaging framework that can support designers, writers, and developers alike.

📢 Want to learn more?

Let’s set up a time to chat: reach out via one of the links in the footer below.